The data set recorded in a "Wizard of Oz" environment is available to the research community on request. Please use the contact page to request access to the data set.

Sample data

The following videos show an extract from the Vernissage data set. The first video is recorded from Nao's internal camera and shows the two participants the robot is interacting with. The second video shows the same scene from behind, recorded by a standard HD video camera.

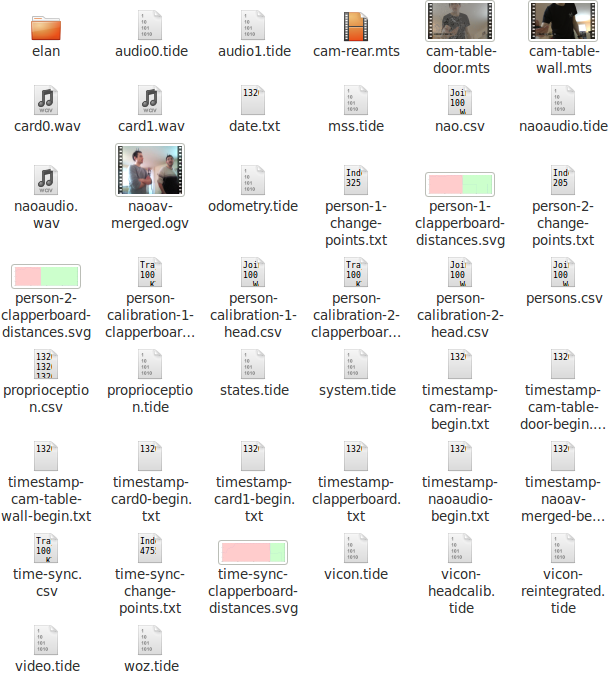

The following screenshot gives an overview of the data recorded within one session:

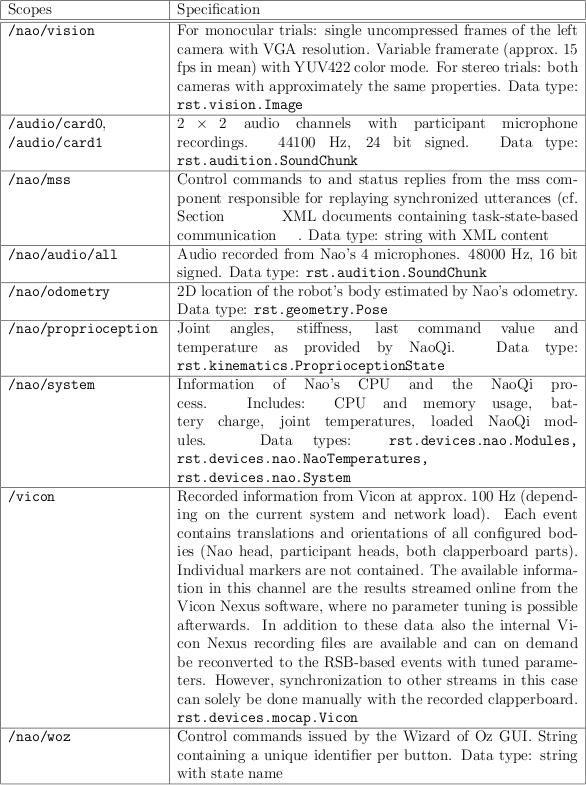

A detailed specification of all streams recorded using RSBag is available in the following table. It lists the RSB scope on which events for the respective stream were published, along with more detailed information on the contents. The data types mentioned are taken from RST release 1.0.

Besides the aforementioned data recorded through the RSB middleware, the three external HD cameras were recorded at a video resolution of 1920 × 1080 pixels and 25 fps, with 48000 Hz, 16-bit signed, 5.1-channel audio.
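The stated rates imply a fixed ratio between audio samples and video frames in the HD recordings. The following is a minimal sketch of that arithmetic. It assumes that audio and video in a file start at the same instant and that frames are counted from zero. Neither assumption is confirmed by the data set description, and the function name is purely illustrative.

```python
VIDEO_FPS = 25          # frames per second, as stated above
AUDIO_RATE_HZ = 48_000  # audio samples per second (per channel), as stated above

# Number of audio samples (per channel) that fall within one video frame.
SAMPLES_PER_FRAME = AUDIO_RATE_HZ // VIDEO_FPS


def audio_span_for_frame(frame_index):
    """Return the half-open sample range [start, end) covered by a video frame.

    Assumption: frame 0 and sample 0 are recorded at the same instant.
    """
    start = frame_index * SAMPLES_PER_FRAME
    return start, start + SAMPLES_PER_FRAME
```

Under these assumptions, each video frame corresponds to 1920 audio samples per channel.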

Data formats

Besides the data being available in TIDE/RSB format, we offer an export in widely used formats. As an example, the Vicon data has been exported as follows:

- Trial Time Synchronization

  - clapperboard-timestamp.txt: Contains the RSB timestamp of the Vicon frame that contained the clapperboard event.

  - time-sync-change-points.txt: The first column contains the Vicon frame number of the clapperboard event.

  - time-sync-clapperboard-distances.svg: A visualization of the result of the automatic clapperboard detection in the Vicon data.

  - time-sync.csv: Raw Vicon data of the relevant trial time containing the clapperboard event; the above calculations were based on this file.

- Trial data - Recorded at 100 Hz. Each line in the file represents one Vicon frame, hence 100 frames form one second of recording time.

  - nao.csv: Nao's head pose

  - persons.csv: Head poses of both persons

- Person Head Pose Calibration (for both persons)

  - person-calibration-1-clapperboard.csv: Vicon tracking of the clapperboard marking the time at which the person looked at the spot.

  - person-calibration-1-head.csv: Tracking results of the person's head.

  - person-1-change-points.txt: Person's neutral pose at the time of the clapperboard (averaged over a short window to improve stability).

  - person-1-clapperboard-distances.svg: Visualization of the clapperboard detection.
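Because the trial data is recorded at 100 Hz and the clapperboard event is known both as a Vicon frame number and as an RSB timestamp, any other Vicon frame can be mapped onto the RSB timeline by linear extrapolation. The sketch below illustrates this under stated assumptions: the timestamp is taken to be an integer number of microseconds, the Vicon frame rate is taken to be exactly constant, and the two values have already been read from clapperboard-timestamp.txt and time-sync-change-points.txt. The exact layout of those files is not described here, so no parsing is shown, and the function name is hypothetical.

```python
VICON_RATE_HZ = 100  # one CSV line per Vicon frame, 100 frames per second


def frame_to_timestamp_us(frame, clap_frame, clap_timestamp_us,
                          rate_hz=VICON_RATE_HZ):
    """Map a Vicon frame number to an absolute timestamp.

    Assumptions: clap_timestamp_us is the RSB timestamp of the clapperboard
    frame in microseconds, and the frame rate does not drift.
    """
    offset_frames = frame - clap_frame
    return clap_timestamp_us + round(offset_frames * 1_000_000 / rate_hz)
```

For example, a frame 100 frames after the clapperboard frame is mapped to a timestamp exactly one second later. Frames before the clapperboard event yield earlier timestamps.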

Post-processing

The primary aims of the post-processing phase were to:

- synchronize the HD videos with the remaining data

- validate the synchronicity of all recorded RSB data

- export the data to the annotation tool

- re-import the generated annotation into the RSBag files.

In general, the post-processing procedure follows three basic steps. First, the channels that do not have explicit absolute timing information need to be synchronized to the rest of the timestamped data set. In a second step, we can generate views on selected parts of the data. These views can span just sub-episodes of the larger recordings and can include only the subset of the recorded data streams needed for a specific task. As a last step, we envision that annotations and similar secondary data will be reintegrated into the original files of the data set, so that they can be used, e.g., for evaluation or as input to parts of the overall system.
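The notion of a view in the second step can be illustrated as a filter over timestamped events: keep only the events inside a time window (the sub-episode) that belong to the selected streams. The sketch below is a conceptual illustration only and does not use the RSBag API. The `Event` class, the `make_view` function, the microsecond timestamps, and the scope names in the usage example are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    scope: str          # stream identifier (hypothetical scope name)
    timestamp_us: int   # absolute timestamp, assumed to be in microseconds
    payload: object = None


def make_view(events, start_us, end_us, scopes):
    """Select events in [start_us, end_us) that belong to the given scopes."""
    wanted = set(scopes)
    return [e for e in events
            if start_us <= e.timestamp_us < end_us and e.scope in wanted]


# Usage with made-up scope names and timestamps:
events = [
    Event("/example/head-pose", 1_000),
    Event("/example/audio", 1_500),
    Event("/example/head-pose", 2_500),
]
view = make_view(events, 0, 2_000, ["/example/head-pose"])
```

Here `view` contains only the first head-pose event, since the audio event is outside the selected streams and the last event is outside the time window.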

Annotation

Apart from the ground truth data that is automatically generated by the recording process and the devices used in it, we have also carried out manual annotations. The Vernissage Corpus includes the following:

- 2D Head location annotation

- Visual Focus of Attention annotation (VFOA)

- Nodding annotation

- Utterance annotation

- Addressee annotation