For the scenario, we modeled the interaction of an art guide in a small, private vernissage setting. The robot welcomes visitors and guides them through the vernissage by explaining several paintings and initiating a small quiz. Because naive participants were invited to interact with the robot, the data set allows the analysis of human behavior towards the robot in a realistic interaction situation, in addition to being available for benchmarking.

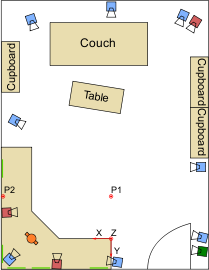

Orange: NAO; cameras: blue (Vicon), red (HD); green lines: paintings; red: Vicon coordinate system; P1 and P2 indicate calibration positions for the participants’ head orientations.

In detail, the scenario unfolded as follows:

- The visitors arrived in pairs and were greeted by the robot when they came within normal interaction distance. After this greeting, the robot offered some explanations about the paintings present in the vernissage.

- When the visitors agreed to this, the robot started explaining three different groups of paintings using speech and matching gestures. These explanations included pauses intended to elicit comments from the visitors and, at specific points, gave them the chance to tell the robot whether they wanted to hear another explanation.

- When the explanations were finished, the robot asked the visitors whether they were interested in participating in a quiz. After they agreed to this, NAO introduced itself and asked each participant to state their name and introduce themselves.

- The robot then explained the general quiz rules, which included that the visitors should discuss among themselves before giving their answers. The robot then proceeded to ask several questions about the paintings and more general topics, and also judged the answers given by the participants.

- After the quiz was finished, the robot asked each visitor to decide on a favourite painting and afterwards told the participants to discuss and choose one common favourite, and also to propose a new, fitting name for that painting.
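The phases listed above could, for instance, be encoded as an ordered enumeration when labeling segments of a recording. The following is only an illustrative sketch: the phase names and the `next_phase` helper are hypothetical and are not part of the data set or its annotation scheme.

```python
from enum import Enum
from typing import Optional


class Phase(Enum):
    """Hypothetical labels for the scenario phases, in the order described."""
    GREETING = 1
    EXPLANATIONS = 2
    INTRODUCTIONS = 3
    QUIZ = 4
    FAVOURITE_PAINTING = 5


def next_phase(phase: Phase) -> Optional[Phase]:
    """Return the phase that follows `phase`, or None after the last one."""
    phases = list(Phase)  # Enum members iterate in definition order
    index = phases.index(phase)
    return phases[index + 1] if index + 1 < len(phases) else None
```

A linear ordering is assumed here for simplicity; it ignores the points at which visitors could decline an explanation or the quiz.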

Prototype Scenes

Besides the recordings of the vernissage scenario, several additional scenes were recorded with variations of the scenario. While the vernissage recordings provide a basis for a more empirical evaluation in a controlled environment and scenario, these prototypical scenes serve two general purposes:

- Providing data, including ground truth, for examining the impact of less structure in the scenario and environment. This examination, on the one hand, helps to find perspectives for the algorithmic realization and, on the other hand, tests how the data set scenario can be improved for future recording sessions.

- Providing data specifically intended to examine the performance of certain algorithms with special requirements on the recorded data which could not be fulfilled in the general scenario, e.g. for mapping and localization.

Stereo Prototype Scenes

Three additional prototypical scenarios were recorded once initial, roughly synchronized access to the images from both of the robot's cameras was available.

The Vernissage

The original vernissage scenario was recorded again once the stereo recording solution was available, but without naive users because of the short time frame in which the recording became possible. Two recordings used the original setting, with two people in front of the robot. A third recording included an additional person visible in the scene.

Mapping and Localization

Several recordings were made in which the robot walked on the table in front of the paintings. In total, four trials were recorded; they will serve to evaluate and benchmark the mapping, localization, and eventually navigation algorithms developed in the project.

Speaker Tracking

To evaluate algorithms for the audio-visual detection and tracking of speaking persons, a special scene with three persons was recorded. At the beginning of this recording, the persons said their names one after the other. In a second step, all three persons exchanged positions. One of the persons was always seated while the other two were standing upright, to provide some variation in both elevation and azimuth as seen from the robot’s perspective. This process of speaking and exchanging places was then repeated several times.
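The variation in azimuth and elevation mentioned above can be made concrete with a small sketch that converts a person's 3D position into the two angles as seen from the robot. The coordinate convention (origin at the robot's head, x forward, y left, z up) and the example positions are assumptions made for illustration only; they are not taken from the recordings.

```python
import math
from typing import Tuple


def azimuth_elevation(x: float, y: float, z: float) -> Tuple[float, float]:
    """Return (azimuth, elevation) in degrees for a point in a robot-centered frame.

    Assumed convention (not from the data set): x forward, y left, z up.
    """
    azimuth = math.degrees(math.atan2(y, x))
    # Elevation is measured against the horizontal distance to the point.
    elevation = math.degrees(math.atan2(z, math.hypot(x, y)))
    return azimuth, elevation


# Made-up positions in metres, relative to the robot's head.
seated = azimuth_elevation(1.5, 0.5, 0.2)
standing = azimuth_elevation(1.5, -0.5, 0.8)
```

With these made-up positions, the standing person appears at a higher elevation and on the opposite side in azimuth compared with the seated person, which is the kind of spread the scene was designed to provide.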

Monocular Prototype Scenes

Apart from the extra stereo recordings, some variations on the scenario were also recorded with monocular vision only, directly after the initial vernissage recordings.

Increased Number of People

The main scenario was additionally performed with more people appearing in the scene. This makes it possible to inspect how the performance of the tested algorithms scales with the number of people.

Moving Robot

One idea for future data sets is to include variants of the normal vernissage scenario in which the robot walks from painting to painting. To provide a first testbed for algorithms in this direction, we also recorded a scene in the vernissage room in which the robot walks while several people are present. This scene allows inspecting the effects of egomotion on algorithmic processing, or analyzing the performance of auditory algorithms with respect to the noise produced by the robot while walking.